Machine Learning Weight Mean

The larger the MAE, the more serious the error. These parameters are, in turn, all the parameters used in the layers of the model.


Hidden layer 1 had mean -0.000139 and std 0.213260.

Machine learning weight mean. The idea of weight is a foundational concept in artificial neural networks. How over-fitting can be useful (1996). Even though research shows that aggregate stability and mean weight diameter (MWD) are critical components of soil health, they are not routinely measured.

In this article, learn more about what weighting is, why you should and shouldn't use it, and how to choose optimal weights to minimize business costs. Learning with ensembles. Weights are all the parameters, both trainable and non-trainable, of any model.

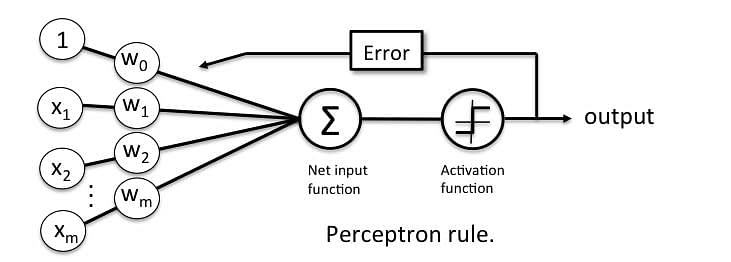

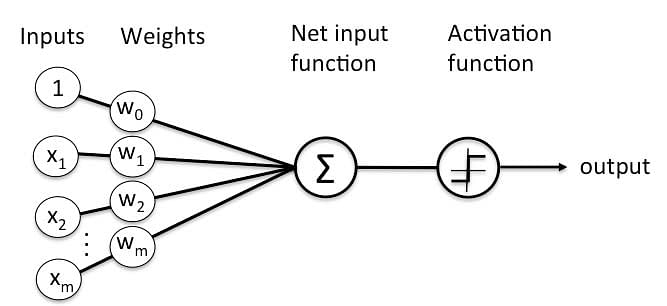

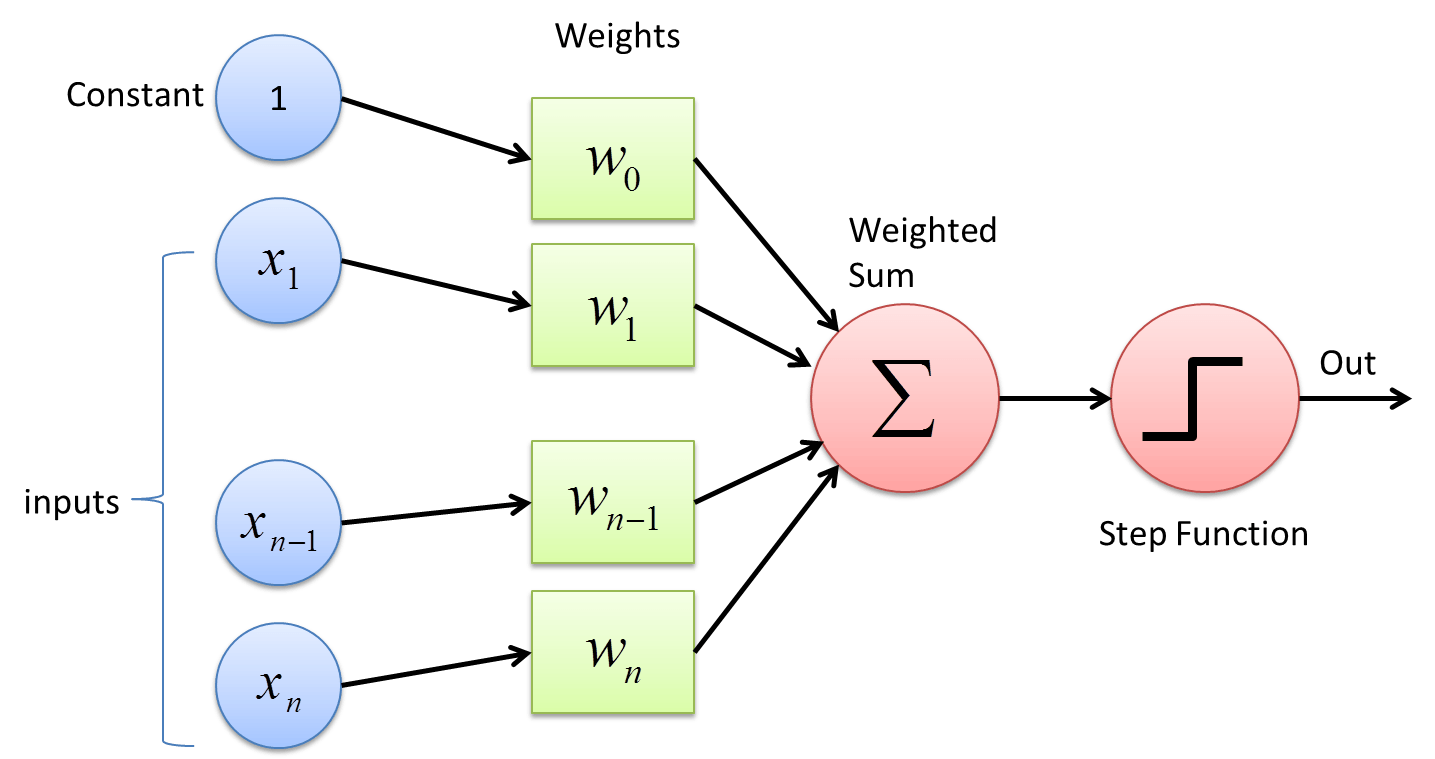

Weight is the parameter within a neural network that transforms input data within the network's hidden layers. Plain normally distributed random numbers often do not work well for weight initialization in deep learning. Let's take a simple example.

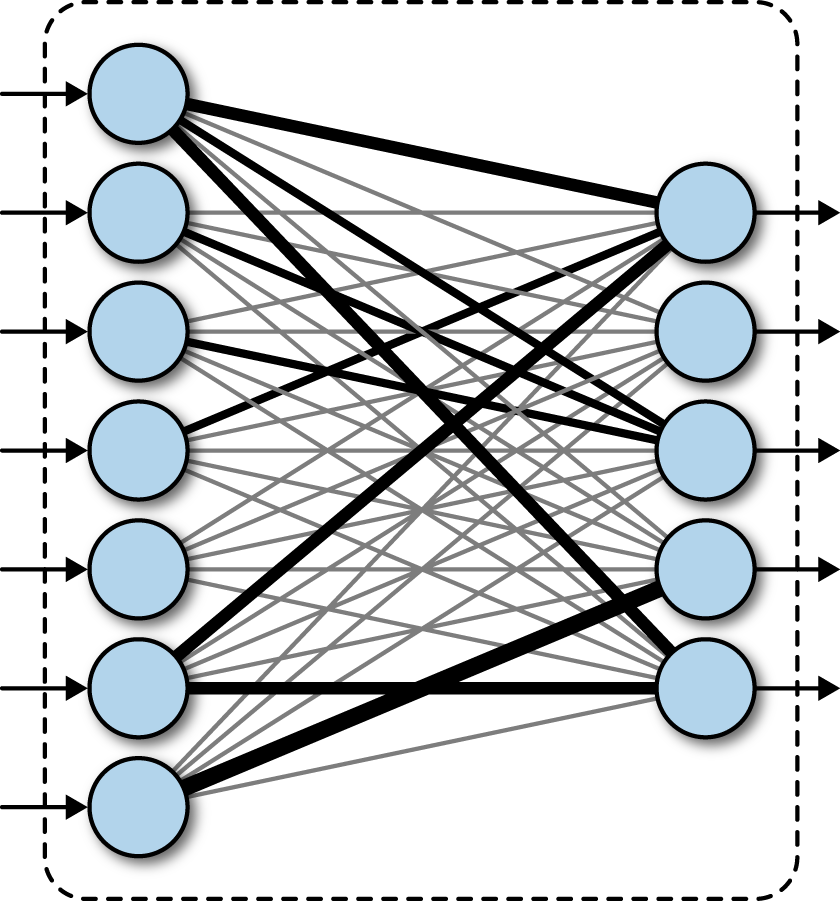

Professionals dealing with machine learning and artificial intelligence projects, where artificial neural networks or similar systems are used, often talk about weight as a function of both biological and technological systems. Weighting is a technique for improving models. Many algorithms will automatically set those weights to zero in order to simplify the network.

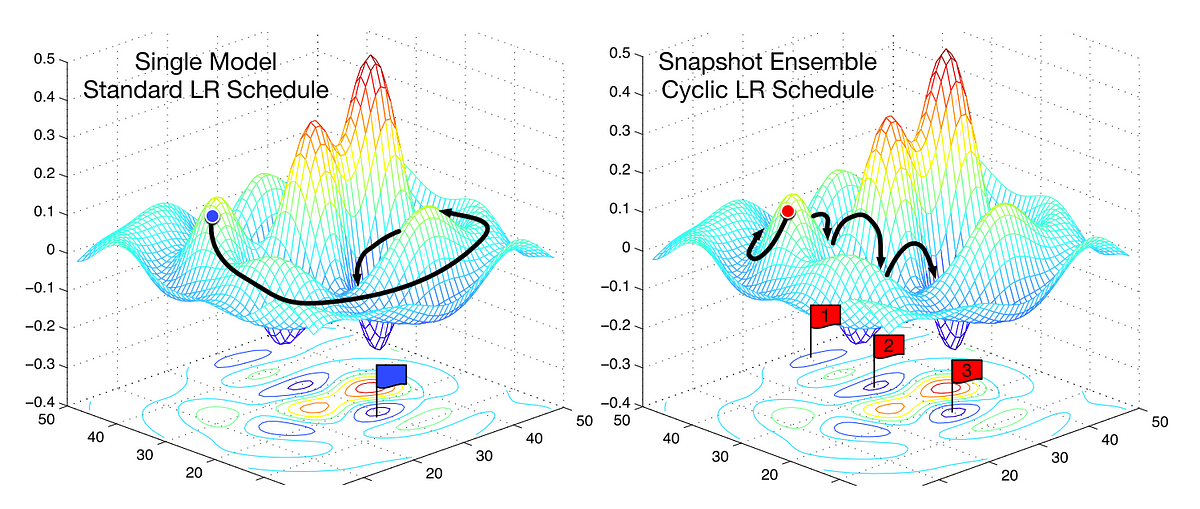

Weights near zero mean that changing this input will not change the output. Training deep models is a sufficiently difficult task that most algorithms are strongly affected by the choice of initialization. Arithmetic mean of the score for each class, weighted by the number of true instances in each class.
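The support-weighted mean described above can be sketched in a few lines. The class names, scores, and instance counts below are made up for illustration, and the helper name `weighted_average_score` is hypothetical rather than taken from any library.

```python
def weighted_average_score(per_class_scores, support):
    """Mean of per-class scores, weighted by the number of true instances per class."""
    total = sum(support[label] for label in per_class_scores)
    if total == 0:
        raise ValueError("support must contain at least one true instance")
    return sum(per_class_scores[label] * support[label]
               for label in per_class_scores) / total


# Hypothetical per-class scores (for example F1) and true-instance counts.
scores = {"cat": 0.80, "dog": 0.60, "bird": 0.90}
support = {"cat": 50, "dog": 30, "bird": 20}

weighted = weighted_average_score(scores, support)
unweighted = sum(scores.values()) / len(scores)  # plain mean, ignores class sizes

print(f"weighted mean:   {weighted:.4f}")
print(f"unweighted mean: {unweighted:.4f}")
```

The weighted value is pulled toward the score of the largest class, which is why it can differ noticeably from the plain mean on imbalanced data.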

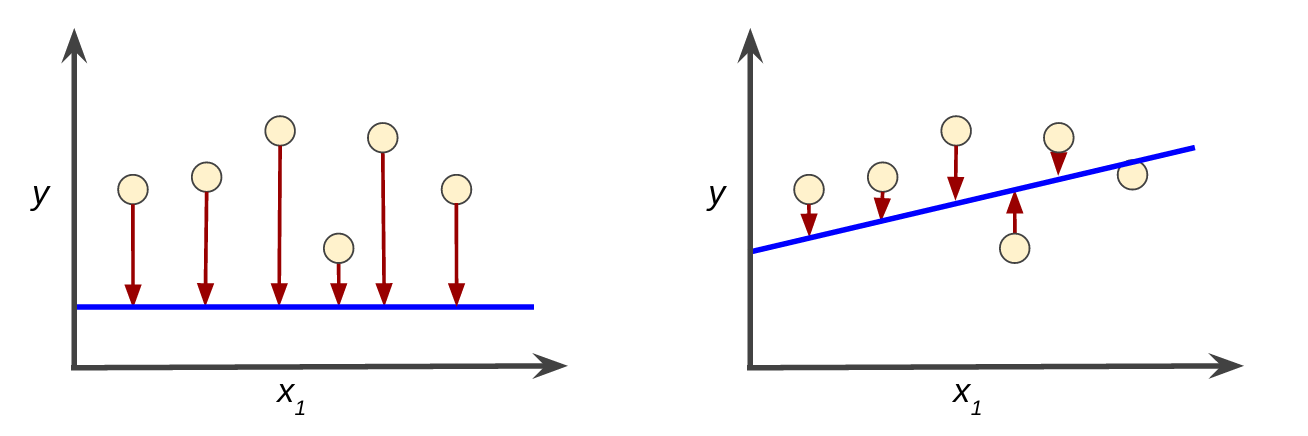

But for a convolution layer, the weights are the filter weights and biases. The ranges of both features on their respective axes will be the same, and the result will look like this. For example, if you want to predict the value of $y$ as a function of $x_1$ and $x_2$ with a line.

In machine learning, we call coefficients weights. In statistics, x is referred to as an independent variable, while machine learning calls it a feature. A weight of 1/k for each member, where k is the number of ensemble members, means that the weighted ensemble acts as a simple averaging ensemble.

w1 is the weight for the input variable x, and x is the input variable. If I increase the input, how much influence does it have on the output?
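One way to make the question of influence concrete is a one-variable linear model. This is a minimal sketch, assuming the model has the form y = w0 + w1 * x; the numeric values of `w0` and `w1` are arbitrary and chosen only for illustration.

```python
def predict(x, w0, w1):
    """Linear prediction: bias term w0 plus weight w1 times the input x."""
    return w0 + w1 * x


w0 = 1.0  # bias term (assumed value)
w1 = 2.0  # weight on the input variable x (assumed value)

y_before = predict(3.0, w0, w1)
y_after = predict(4.0, w0, w1)  # the input is increased by exactly one unit

# For a linear model, a one-unit increase in x changes the output by w1.
change = y_after - y_before
print(y_before, y_after, change)
```

In this setting the weight is exactly the change in the output per unit change in the input, so a larger absolute weight means a more influential input.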

The MAE is expressed in the same unit as the predicted variable. Weight: the strength of the connection. MAE is the average of the absolute differences between the actual value and the model's predicted value.
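The MAE definition above translates directly into code. The following is a small sketch with made-up actual and predicted values; the function name `mean_absolute_error` is simply a descriptive choice for this example.

```python
def mean_absolute_error(actual, predicted):
    """Average of the absolute differences between actual and predicted values."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    if not actual:
        raise ValueError("at least one value is required")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Illustrative values only; both lists share the unit of the predicted variable.
actual = [3.0, 5.0, 2.5, 7.0]
predicted = [2.5, 5.0, 4.0, 8.0]

mae = mean_absolute_error(actual, predicted)
print(mae)
```

Because the differences are averaged without squaring, the result stays in the unit of the predicted variable.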

Therefore, the objective was to compare two artificial intelligence (AI)-based machine learning approaches, that is. An Azure Machine Learning experiment created with either. I'm just starting to investigate machine learning concepts, so I'm sorry if this question is very naive, but I'm hoping that it will be an easy one to answer.

Uniform values for the weights, e.g. If you look at the sklearn documentation for logistic regression, you can see that the `fit` function has an optional `sample_weight` parameter, which is defined as an array of weights assigned to individual samples. Let's say that you want to create a model to predict the weight of a coin.

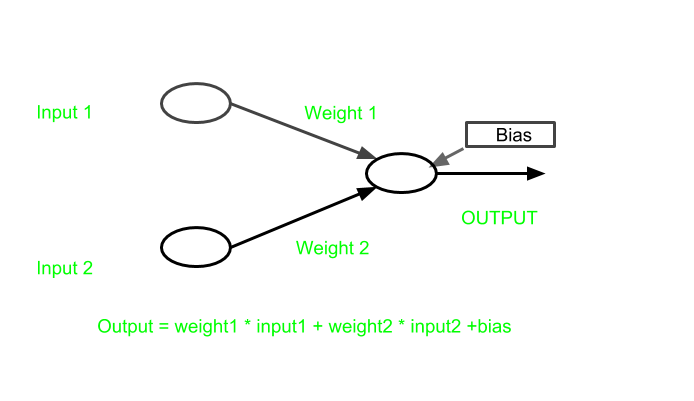

Within each node is a set of inputs, a weight, and a bias value. Step 2 - Divide by the standard deviation (normalize the variance). There will be three inputs to your machine learning model: the diameter of the coin, the thickness of the coin, and the material that the coin is made of.

Machine-learning classification scikit-learn sample weights. An alternative approach to the physical measurement is to calculate these values based on routinely measured soil parameters. A set of weighted inputs allows each artificial neuron or node in the system to produce related outputs.

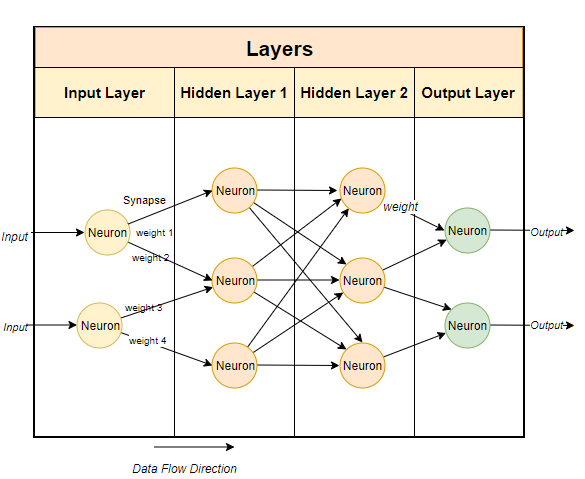

w0 is the bias term. The Azure Machine Learning studio. As an input enters the node, it gets multiplied by a weight value, and the resulting output is either observed or passed to the next layer in the neural network.
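The node computation described above can be sketched as a weighted sum plus a bias. This is an illustration only: the input values, weights, and bias are invented, and no activation function is applied, although real networks usually add one.

```python
def node_output(inputs, weights, bias):
    """Multiply each input by its weight, sum the products, and add the bias."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


weights = [0.5, 0.0, 0.25]  # the second weight is zero (assumed values)
bias = 0.1                  # assumed bias value

out_a = node_output([1.0, 2.0, 3.0], weights, bias)
out_b = node_output([1.0, 100.0, 3.0], weights, bias)  # only the second input changed

# A zero weight means the second input has no effect on the output.
print(out_a, out_b)
```

The two outputs are identical, which matches the idea that a weight at or near zero makes the corresponding input irrelevant to the node's output.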

I have a document matching algorithm that individually calculates a match for each field (0-1, with 0 meaning no match and 1 a 100% match) and applies a separate weight to each field match to be. Step 1 - Subtract the mean. A neural network is a series of nodes, or neurons.

For every layer in. Generally, in machine learning, weights are multiplied with the relevant inputs/features to obtain some prediction. A machine learning technique that iteratively combines a set of simple and not very accurate classifiers (referred to as weak classifiers) into a classifier with high accuracy (a strong classifier).

With the increase in recall from the previous threshold used as the weight. Effectively shifting the dataset so that it has zero mean. The coefficient for the Radio independent variable.

The mean of the dataset is calculated as $\mu = \frac{1}{m}\sum_{i=1}^{m} x^{(i)}$ and is then subtracted from each individual training example. It is the information that is given to us at any time, both during training and predictions. Weight initialization is a procedure to set the weights of a neural network to small random values that define the starting point for the optimization (learning or training) of the neural network model.
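The mean-subtraction step can be sketched as follows for a single feature. The second step, dividing by the standard deviation, is an assumption about what the scaling step intends, and the sample values are made up.

```python
import math


def standardize(values):
    """Subtract the mean, then divide by the (population) standard deviation."""
    n = len(values)
    if n == 0:
        raise ValueError("at least one value is required")
    mean = sum(values) / n
    centered = [v - mean for v in values]  # step 1: the data now has zero mean
    variance = sum(c * c for c in centered) / n
    std = math.sqrt(variance)
    if std == 0:
        return centered  # constant feature: nothing to scale
    return [c / std for c in centered]     # step 2: the data now has unit variance


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
scaled = standardize(data)
print(scaled)
```

After both steps, features measured on very different scales end up in comparable ranges, which tends to make gradient-based training better behaved.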

One can think of the weight $w_k$ as the belief in predictor k, and we therefore constrain the weights to be positive and sum to one.
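The constraint above can be illustrated with a small weighted ensemble. In this sketch the raw belief values and member predictions are invented, and rescaling by the total is just one simple way to make positive weights sum to one.

```python
def normalize_weights(raw_weights):
    """Rescale positive raw weights so that they sum to one."""
    if any(w <= 0 for w in raw_weights):
        raise ValueError("weights must be positive")
    total = sum(raw_weights)
    return [w / total for w in raw_weights]


def weighted_ensemble(predictions, weights):
    """Combine member predictions as a weighted sum."""
    if len(predictions) != len(weights):
        raise ValueError("one weight per ensemble member is required")
    return sum(w * p for w, p in zip(weights, predictions))


predictions = [10.0, 12.0, 17.0]              # hypothetical outputs of three predictors
weights = normalize_weights([2.0, 1.0, 1.0])  # more belief in the first predictor

combined = weighted_ensemble(predictions, weights)

# Uniform weights of 1/k reduce the ensemble to a simple average.
uniform = normalize_weights([1.0, 1.0, 1.0])
simple_average = weighted_ensemble(predictions, uniform)

print(weights, combined, simple_average)
```

Because the weights sum to one, the combined prediction always lies between the smallest and largest member prediction.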
