Bagging Machine Learning Ppt

This image was uploaded in a PPT designed by the SAS team. CS 2750 Machine Learning, Lecture 23: Ensemble methods. Milos Hauskrecht, milos@cs.pitt.edu, 5329 Sennott Square.

Bagging And Boosting In Data Mining Carolina Ruiz Ppt Download

Data mining focuses on the discovery of previously unknown properties in the data.

What are ensemble methods? The idea here is to create several subsets of data from the training sample, chosen randomly with replacement. Bagging Predictors. Leo Breiman, leo@stat.berkeley.edu, Statistics Department, University of California, Berkeley, CA 94720.
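The sampling step described above, drawing subsets from the training sample at random with replacement, can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the helper names `bootstrap_sample` and `make_bootstrap_samples` are hypothetical and do not come from any of the quoted sources.

```python
import random


def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in range(len(data))]


def make_bootstrap_samples(data, n_samples, seed=0):
    """Return n_samples bootstrap replicates of data (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    return [bootstrap_sample(data, rng) for _ in range(n_samples)]


if __name__ == "__main__":
    training = list(range(1000))  # stand-in for a real training set
    replicates = make_bootstrap_samples(training, n_samples=5)
    for i, rep in enumerate(replicates):
        # Because sampling is with replacement, some items repeat and
        # others are left out of each replicate.
        distinct = len(set(rep)) / len(training)
        print(f"replicate {i}: {len(rep)} items, {distinct:.1%} distinct")
```

Each replicate has the same size as the original sample but contains repeats, so on average only about 63% of the original points (1 - 1/e) appear in any one replicate. That difference between replicates is what makes the models trained on them differ.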

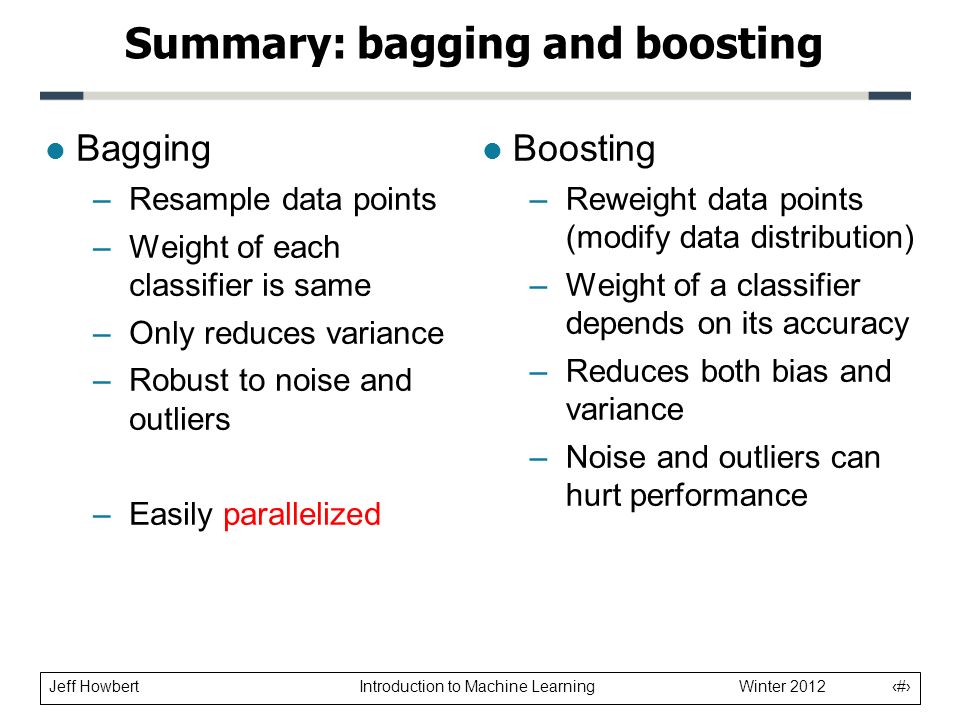

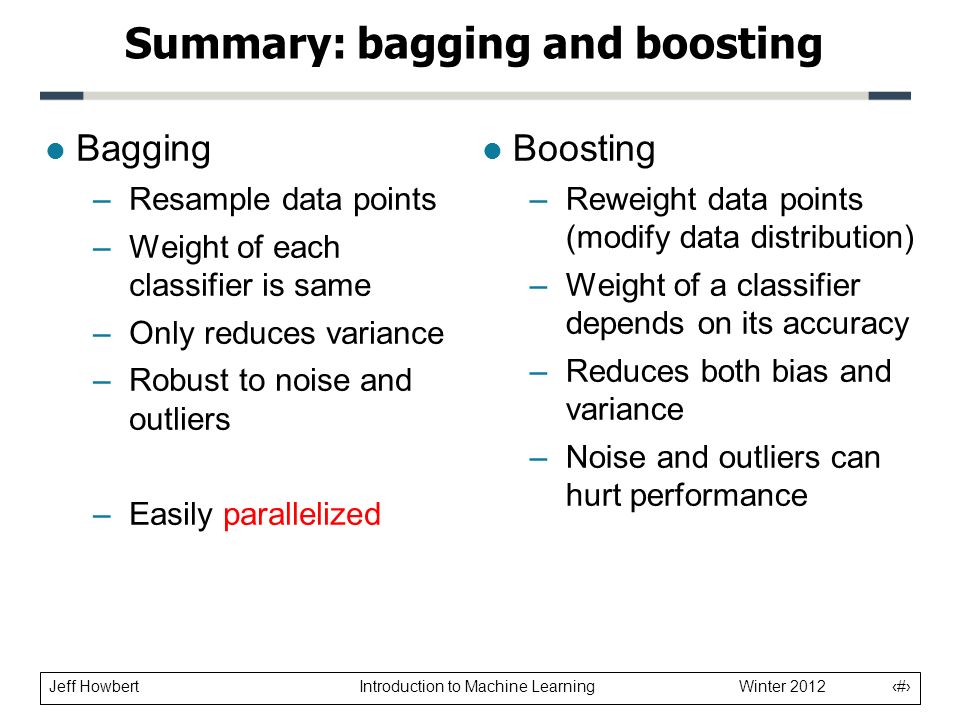

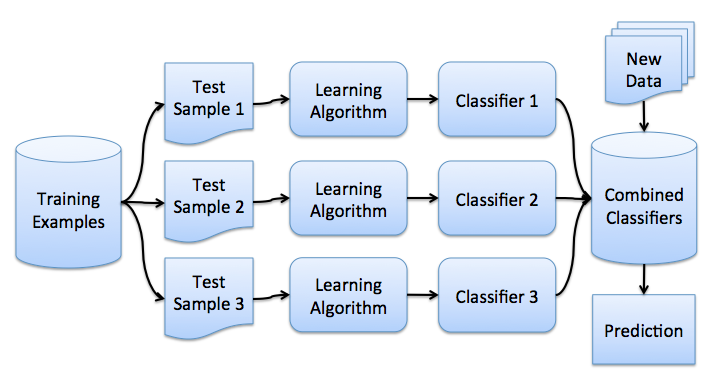

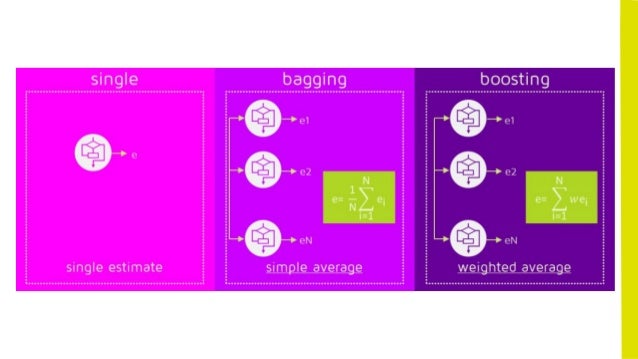

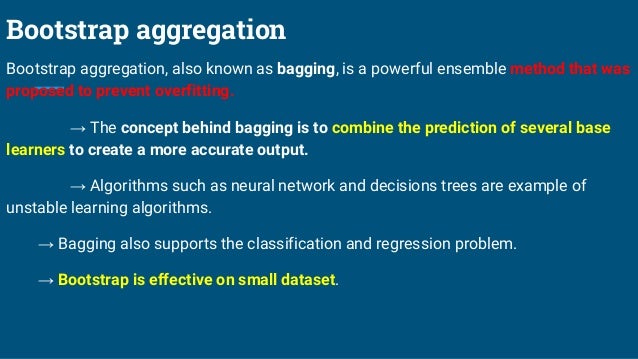

A random forest is a classifier consisting of a collection of tree-structured classifiers. Bagging, a meta-algorithm that is a special case of model averaging, was originally designed for classification and is usually applied to decision tree models, but it can be used with any type of model for classification or regression. Bagging (Breiman, 1996), a name derived from "bootstrap aggregation", was the first effective method of ensemble learning and is one of the simplest methods of arching.

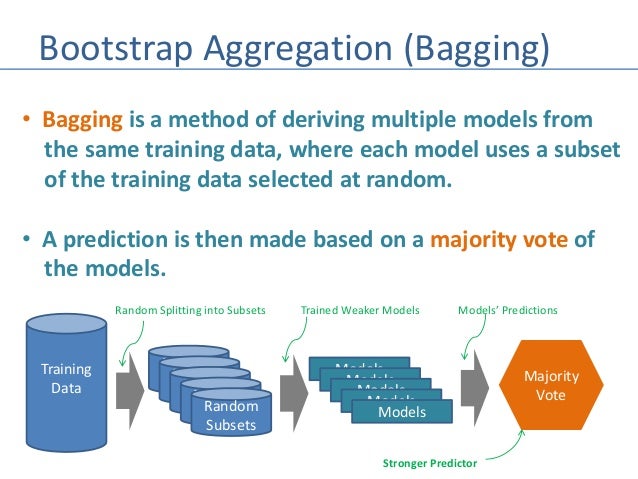

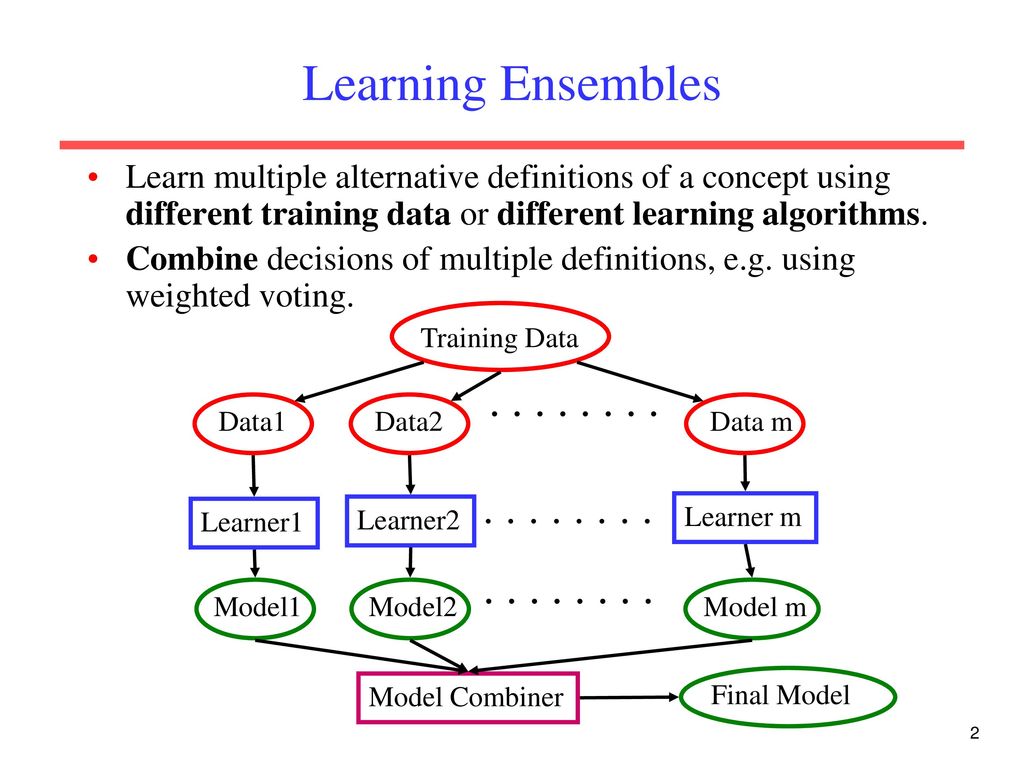

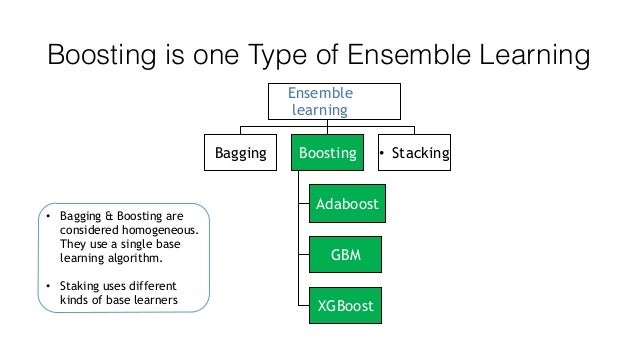

Ensemble Learning: Bagging, Boosting, Stacking and Cascading Classifiers in Machine Learning using SKLEARN and MLEXTEND libraries. Bagging (bootstrap aggregation) is used when our goal is to reduce the variance of a decision tree. Ensemble methods improve model precision by using a group of models which, when combined, outperform the individual models used separately.

In machine learning, performance is usually evaluated with respect to the ability to reproduce known knowledge. As a result of training on different subsets, we end up with an ensemble of different models. Reports are due on Wednesday, April 21, 2004 at 12:30pm.

Ensemble learning is a machine learning paradigm where multiple models, often called weak learners, are trained to solve the same problem and combined to get better results. Each subset of the data is then used to train its own decision tree, so we end up with an ensemble of different models. Bootstrap aggregation, famously known as bagging, is a powerful and simple ensemble method.
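The procedure described here, training one model per bootstrap subset and combining them, might look roughly like the sketch below. It makes several assumptions for brevity: the base learner is a one-feature decision stump rather than a full decision tree, the task is binary classification, and predictions are combined by majority vote. The function names `fit_stump`, `bagging_fit`, and `bagging_predict` are hypothetical.

```python
import random
from collections import Counter


def fit_stump(points):
    """Fit a decision stump on (x, label) pairs with labels 0 or 1.

    Tries every observed x as a threshold, in both orientations, and keeps
    the rule with the fewest training errors.
    """
    best = None
    for threshold in sorted({x for x, _ in points}):
        for left, right in ((0, 1), (1, 0)):
            errors = sum(
                (left if x <= threshold else right) != y for x, y in points
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    _, threshold, left, right = best
    return lambda x: left if x <= threshold else right


def bagging_fit(points, n_models, seed=0):
    """Train n_models stumps, each on its own bootstrap sample of points."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        sample = [rng.choice(points) for _ in range(len(points))]
        models.append(fit_stump(sample))
    return models


def bagging_predict(models, x):
    """Combine the individual predictions by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    rng = random.Random(42)
    # Toy 1-D data: label is 1 when x > 5, with 10% of labels flipped.
    train = []
    for _ in range(200):
        x = rng.uniform(0, 10)
        y = int(x > 5)
        if rng.random() < 0.1:
            y = 1 - y
        train.append((x, y))
    ensemble = bagging_fit(train, n_models=25)
    print(bagging_predict(ensemble, 2.0), bagging_predict(ensemble, 8.0))
```

An odd number of models avoids tied votes in the binary case. For regression, the vote would typically be replaced by an average of the individual predictions.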

Bagging and Boosting 3 Ensembles. Machine Learning project: team members. Machine Learning 24, 123-140 (1996). © 1996 Kluwer Academic Publishers, Boston.

Here the concept is to create a few subsets of data from the training sample, chosen randomly with replacement. Manufactured in The Netherlands. The main hypothesis is that when weak models are correctly combined, we can obtain more accurate and/or robust models.

Bagging is a powerful ensemble method that helps to reduce variance and, by extension, prevent overfitting. Machine Learning Project 1. Each tree is grown with a random vector V_k, where the V_k, k = 1, ..., L, are independent and identically distributed.
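One way to see why averaging reduces variance is the following standard identity. It is offered as an illustration under stated assumptions, not as something taken from the quoted sources: suppose B base models give predictions f_b(x) that each have variance σ² and pairwise correlation ρ (all of these symbols are introduced here for illustration). Then the variance of their average is:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} f_b(x)\right)
  = \frac{1}{B^2}\left(B\,\sigma^2 + B(B-1)\,\rho\,\sigma^2\right)
  = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

As B grows, the second term shrinks toward zero, so the remaining variance is governed by how correlated the models are. This suggests that the benefit of bagging is largest when the individual models differ noticeably from one bootstrap sample to the next.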

The key task is the discovery of. Choose an unstable classifier for bagging, such as a decision tree, since bagging helps most when small changes in the training data change the fitted model substantially.

Bagging variants: Random Forests. A variant of bagging proposed by Breiman. It's a general class of ensemble-building methods using a decision tree as the base classifier. The success of a machine learning system also depends on the algorithms. L. Breiman, Machine Learning 24(2), pp. 123-140, 1996.

Bagging: Bagging is used when our objective is to reduce the variance of a decision tree. Each subset of the data is then used to train its own decision tree. Another approach: instead of training different models on the same data, train the same model multiple times on different samples of the data.

Jack, Harry, Abhishek 2. Support vector machine (SVM). Presentations on Wednesday, April 21, 2004 at 12:30pm.

Predicting quote conversion: Homesite sells home insurance to home buyers in the United States. Insurance quotes are offered to customers based on several factors. What Homesite knows: customers' geographical, personal, financial, and home ownership information. Machine learning and data mining: machine learning focuses on prediction based on known properties learned from the training data. Bagging and Boosting. CS 2750 Machine Learning. Administrative announcements: term projects.

Machine Learning (CS771A): Ensemble Methods. Ensemble learning is a machine learning technique in which multiple weak learners are trained to solve the same problem and, after training, combined to get more accurate and efficient results. Bagging (bootstrap aggregation), AdaBoost, random forest.

Ppt Bagging And Boosting Classifiers Powerpoint Presentation Free To View Id F5fad Zdc1z

Classification Ensemble Methods 1 Ppt Video Online Download

Ppt Ensemble Learning Powerpoint Presentation Free Download Id 2402964

Bagging With Titanic Dataset Kaggle

Understanding Bagging And Boosting

What Is The Difference Between Bagging And Boosting In Machine Learning Quora

Machine Learning Ensemble Methods

Random Forest Classifier In Machine Learning Palin Analytics

Ppt Ensemble Methods Bagging And Boosting Powerpoint Presentation Free Download Id 1251329

Machine Learning Ensemble Methods Ppt Download

Ensemble Learning Bagging Boosting And Stacking And Other Topics Ppt Video Online Download

Lecture 18 Bagging And Boosting Ppt Download

Machine Learning Ensemble Methods Ppt Video Online Download

Ensemble Learning Bagging Boosting Stacking And Cascading Classifiers In Machine Learning Using Sklearn And Mlextend Libraries By Saugata Paul Medium

Ppt Bagging Powerpoint Presentation Free Download Id 3944570

Boosting Algorithms Omar Odibat
