Machine Learning Recurrent Batch Normalization

One obstacle is known as the vanishing/exploding gradient problem (Amodei et al., 2015).


Looking forward, I think we can safely say that machine learning will become a standard tool in the toolbox of digital amp modeling.

Can you use batch normalization in a recurrent neural network? A common answer is no: the statistics are computed per batch, so naive batch normalization does not take the recurrent part of the network into account. In general, batch normalization can be used with most network types, such as multilayer perceptrons, convolutional neural networks and recurrent neural networks. The Recurrent Batch Normalization paper goes further: whereas previous works only apply batch normalization to the input-to-hidden transformation of RNNs, it demonstrates that it is both possible and beneficial to batch-normalize the hidden-to-hidden transition as well.
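To make the "input-to-hidden only" approach concrete, here is a minimal NumPy sketch of a vanilla tanh RNN in which only the input projection W x_t is batch-normalized, with statistics pooled over the batch and all time steps (the "sequence-wise" normalization described by Amodei et al., 2015). The recurrent term U h_{t-1} is left untouched. All names and shapes (W, U, gamma, beta, seqwise_batchnorm) are illustrative assumptions, not code from any of the cited papers, and only training-mode statistics are shown.

```python
import numpy as np

def seqwise_batchnorm(z, gamma, beta, eps=1e-5):
    # z has shape (T, B, H): time steps, batch, hidden units.
    # Sequence-wise: statistics are pooled over both the time and batch axes,
    # so every time step is normalized with the same mean and variance.
    mean = z.mean(axis=(0, 1), keepdims=True)
    var = z.var(axis=(0, 1), keepdims=True)
    return gamma * (z - mean) / np.sqrt(var + eps) + beta

def rnn_input_bn(x, W, U, gamma, beta):
    # x: (T, B, D). Only the input-to-hidden term W x_t is normalized;
    # the hidden-to-hidden term U h_{t-1} is not.
    T, B, _ = x.shape
    H = W.shape[0]
    wx = seqwise_batchnorm(x @ W.T, gamma, beta)   # (T, B, H), all steps at once
    h = np.zeros((B, H))
    outputs = []
    for t in range(T):
        h = np.tanh(wx[t] + h @ U.T)
        outputs.append(h)
    return np.stack(outputs)                        # (T, B, H)

rng = np.random.default_rng(0)
T, B, D, H = 5, 8, 3, 4
x = rng.normal(size=(T, B, D))
W = rng.normal(size=(H, D))
U = rng.normal(scale=0.5, size=(H, H))
hs = rnn_input_bn(x, W, U, gamma=np.ones(H), beta=np.zeros(H))
print(hs.shape)  # (5, 8, 4)
```

Because the input projections for all time steps can be computed before the recurrence starts, this variant is cheap to add; the catch is that nothing normalizes what flows through the recurrent loop.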

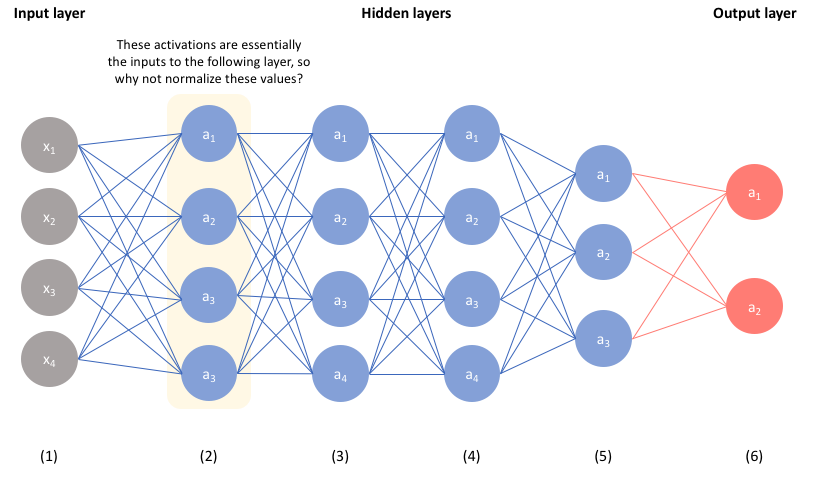

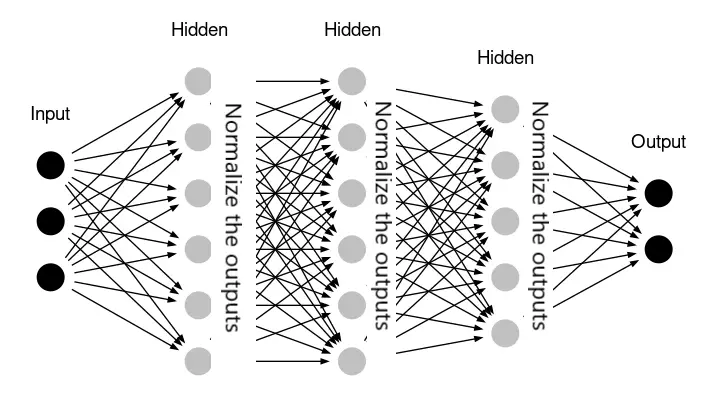

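Deep learning in particular is almost perfectly suited to the challenge of jointly optimizing an end-to-end non-linear system on unstructured data.

Batch normalization is applied to individual layers (optionally to all of them) and works as follows: for each unit, the activations in the current mini-batch are normalized to zero mean and unit variance, then scaled and shifted by two learned parameters, gamma and beta.

The vanishing/exploding gradient problem is a well-known problem that commonly occurs in recurrent neural networks (RNNs).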
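Written out for a single unit whose values in a mini-batch are x_1, …, x_m, the standard batch-normalization transform is shown below. Here ε is a small constant added for numerical stability, and the symbol names are our own notation rather than the source's.

```latex
\begin{aligned}
\mu_{\mathcal{B}} &= \frac{1}{m}\sum_{i=1}^{m} x_i, &
\sigma_{\mathcal{B}}^{2} &= \frac{1}{m}\sum_{i=1}^{m} \left(x_i - \mu_{\mathcal{B}}\right)^{2}, \\
\hat{x}_i &= \frac{x_i - \mu_{\mathcal{B}}}{\sqrt{\sigma_{\mathcal{B}}^{2} + \epsilon}}, &
y_i &= \gamma\,\hat{x}_i + \beta .
\end{aligned}
```

Because gamma and beta are learned, the layer can recover the original activations (gamma equal to the standard deviation, beta equal to the mean) if normalization turns out not to help.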

In this project we explore the application of batch normalization to recurrent neural networks for the task of language modeling. Because batch normalization normalizes the network's activations, higher learning rates may be used. As the work "Orthogonal Recurrent Neural Networks and Batch Normalization in Deep Neural Networks" puts it, despite the recent success of various machine learning techniques, there are still numerous obstacles that must be overcome.

Batch normalization is a powerful technique that decreases training time and improves performance. Its authors attribute this to reducing the internal covariate shift that occurs during training, and it also has a mild regularizing effect. The vanishing/exploding gradient problem, by contrast, refers to gradients that either shrink toward zero or grow without bound.
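Why gradients "become zero or unbounded" in an RNN can be seen with a toy linear recurrence h_t = W h_{t-1}. Backpropagating through T steps multiplies the gradient by Wᵀ T times. In this sketch W is assumed to be an orthogonal matrix scaled by a constant, with no nonlinearity, so each step scales the gradient norm by exactly that constant. The function name and setup are hypothetical, chosen only for illustration.

```python
import numpy as np

def backprop_norms(w_scale, steps=50, size=16, seed=0):
    # Toy linear RNN h_t = W h_{t-1}; the gradient w.r.t. h_0 is (W^T)^steps @ g.
    # W = w_scale * (orthogonal matrix), so each step multiplies the norm by w_scale.
    rng = np.random.default_rng(seed)
    q, _ = np.linalg.qr(rng.normal(size=(size, size)))
    W = w_scale * q
    g = rng.normal(size=size)
    g /= np.linalg.norm(g)          # start from a unit-norm gradient
    norms = []
    for _ in range(steps):
        g = W.T @ g
        norms.append(np.linalg.norm(g))
    return norms

print(backprop_norms(0.9)[-1])  # ≈ 0.0052, i.e. 0.9**50: the gradient vanishes
print(backprop_norms(1.1)[-1])  # ≈ 117.4, i.e. 1.1**50: the gradient explodes
```

A factor only 10% away from 1 already changes the gradient by two orders of magnitude over 50 steps, which is why the statistics of the recurrent transition matter so much.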

Batch normalization is probably best used before the activation function. In an RNN the weights are shared across time steps, yet the activations at each pass through the recurrent loop may have completely different statistical properties. To address this, Cooijmans et al. propose a reparameterization of the LSTM that brings the benefits of batch normalization to recurrent neural networks.
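A minimal NumPy sketch of one training-mode step of such a batch-normalized LSTM, following the structure described in the Recurrent Batch Normalization paper: the recurrent projection W_h h_{t-1} and the input projection W_x x_t are normalized separately, their shifts are fixed at zero because the gate bias b already provides one, and the cell state is normalized before the output tanh. The gate order (f, i, o, g), the parameter names and the small initial gamma of 0.1 are assumptions for illustration. At inference the paper keeps separate population statistics per time step, which this sketch does not show.

```python
import numpy as np

def bn(z, gamma, beta, eps=1e-5):
    # Normalize each feature over the batch axis (axis 0), then scale and shift.
    mean = z.mean(axis=0)
    var = z.var(axis=0)
    return gamma * (z - mean) / np.sqrt(var + eps) + beta

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bn_lstm_step(x, h, c, Wx, Wh, b, gx, gh, gc, bc):
    # x: (B, D); h, c: (B, H); Wx: (D, 4H); Wh: (H, 4H); b, gx, gh: (4H,).
    # Input and recurrent terms get their own statistics and scales (gx, gh);
    # their shifts are 0 because the bias b already plays that role.
    z = bn(h @ Wh, gh, 0.0) + bn(x @ Wx, gx, 0.0) + b
    f, i, o, g = np.split(z, 4, axis=1)
    c_new = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
    # The cell state is normalized (scale gc, shift bc) before the output tanh.
    h_new = sigmoid(o) * np.tanh(bn(c_new, gc, bc))
    return h_new, c_new

rng = np.random.default_rng(0)
B, D, H, T = 16, 10, 8, 6
Wx = rng.normal(scale=0.1, size=(D, 4 * H))
Wh = rng.normal(scale=0.1, size=(H, 4 * H))
b = np.zeros(4 * H)
gx = gh = np.full(4 * H, 0.1)      # small initial scale (assumed 0.1)
gc, bc = np.full(H, 0.1), np.zeros(H)
h, c = np.zeros((B, H)), np.zeros((B, H))
for t in range(T):
    x_t = rng.normal(size=(B, D))
    # Statistics are recomputed from the mini-batch at every time step.
    h, c = bn_lstm_step(x_t, h, c, Wx, Wh, b, gx, gh, gc, bc)
```

Normalizing the two projections separately lets the model control how much each contributes, and recomputing statistics per time step is exactly what distinguishes this from the sequence-wise scheme that pools over time.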

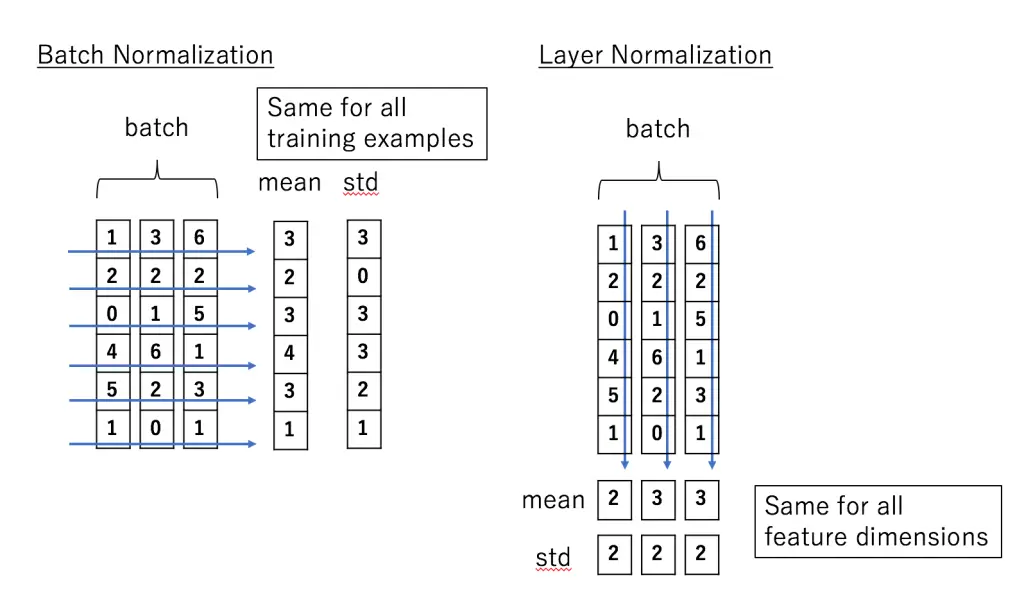

Batch normalization is a general technique that can be used to normalize the inputs to a layer. In each training iteration, we first normalize the inputs of batch normalization by subtracting their mean and dividing by their standard deviation, where both are estimated from the current mini-batch. Recently, there has been some early success in applying batch normalization …
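The training-time step described above can be sketched in a few lines of NumPy for a (batch, features) input. The function name is hypothetical, and the sketch uses the biased variance (dividing by the batch size) and a small eps for numerical stability.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-5):
    # x: (batch, features). Mean and variance come from the current mini-batch only.
    mu = x.mean(axis=0)
    var = x.var(axis=0)                    # biased variance: divides by batch size
    x_hat = (x - mu) / np.sqrt(var + eps)  # zero mean, unit variance per feature
    return gamma * x_hat + beta            # learned scale and shift

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))
y = batch_norm_train(x, gamma=np.ones(4), beta=np.zeros(4))
print(y.mean(axis=0))  # each entry very close to 0
print(y.std(axis=0))   # each entry very close to 1
```

Note that each feature is normalized independently: the statistics are taken down each column (over the batch), never across features.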

Batch normalization takes the outputs of one layer (call it L1) and normalizes each neuron's output across the mini-batch to have a mean of 0 and a standard deviation of 1. It then applies the learned linear transformation with scale gamma and shift beta discussed in the paper, and feeds the results to the respective neurons of the next layer (L2).

If you are working with medical images, take care with batch normalization layers, since they can decrease the performance of your model.

The Recurrent Batch Normalization paper is by Tim Cooijmans, Nicolas Ballas, César Laurent, Çağlar Gülçehre and Aaron Courville.

Batch normalization standardizes intermediate representations by approximating the population statistics with sample-based estimates obtained from small subsets of the data, often called mini-batches. These are the same mini-batches used to obtain gradient approximations for stochastic gradient descent, the most commonly used optimization method for neural network training.
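One common way to turn those mini-batch estimates into population statistics for inference is an exponential moving average, sketched below. The class name, the momentum of 0.1 and the training flag are illustrative assumptions, not a specific library's API.

```python
import numpy as np

class BatchNorm1d:
    """Minimal batch norm over (batch, features) inputs with running statistics."""

    def __init__(self, num_features, momentum=0.1, eps=1e-5):
        self.gamma = np.ones(num_features)
        self.beta = np.zeros(num_features)
        self.running_mean = np.zeros(num_features)
        self.running_var = np.ones(num_features)
        self.momentum = momentum
        self.eps = eps

    def __call__(self, x, training=True):
        if training:
            # Sample-based estimates from the current mini-batch.
            mean, var = x.mean(axis=0), x.var(axis=0)
            # Exponential moving averages approximate the population statistics.
            m = self.momentum
            self.running_mean = (1 - m) * self.running_mean + m * mean
            self.running_var = (1 - m) * self.running_var + m * var
        else:
            # At inference, use the accumulated estimates, so the output for
            # one example no longer depends on the rest of the batch.
            mean, var = self.running_mean, self.running_var
        x_hat = (x - mean) / np.sqrt(var + self.eps)
        return self.gamma * x_hat + self.beta

rng = np.random.default_rng(0)
bn = BatchNorm1d(3)
for _ in range(500):
    bn(rng.normal(loc=2.0, scale=4.0, size=(32, 3)), training=True)
print(bn.running_mean)  # close to 2.0 for each feature
print(bn.running_var)   # near 16 (the biased batch variance pulls it slightly lower)
```

The moving average is a design choice: it is cheap and needs no second pass over the data, at the cost of lagging behind if the weights, and hence the activation statistics, are still changing quickly.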

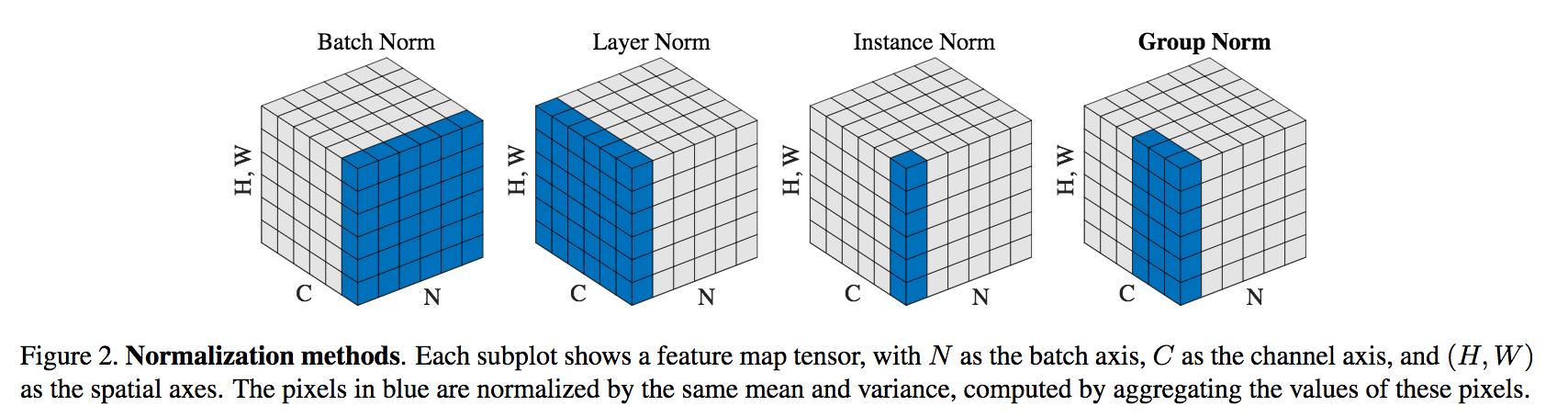

Title: Recurrent Batch Normalization.

Batch normalization depends on the mini-batch size. If the mini-batch is small, the estimated mean and variance are noisy and it may have little to no benefit. If there is no batch at all (one example per update, as in pure online learning), it cannot be used, because a single example is its own mean and every feature normalizes to zero. Batch normalization has been shown to have significant benefits for feed-forward networks in terms of training time and model performance.
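Both failure modes can be reproduced with a bare training-mode normalization (gamma = 1, beta = 0). The function name is hypothetical; the point is only to show what happens to the batch statistics at batch sizes 1 and 4.

```python
import numpy as np

def normalize_batch(x, eps=1e-5):
    # Training-mode normalization over the batch axis, without gamma and beta.
    return (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)

rng = np.random.default_rng(0)

# Batch size 1: the example is its own mean, so every feature becomes 0.
single = rng.normal(size=(1, 4))
print(normalize_batch(single))   # all zeros

# Small batches: the same example gets a different normalized value
# depending on which other examples happen to share its batch.
x0 = rng.normal(size=(1, 4))
outs = [normalize_batch(np.vstack([x0, rng.normal(size=(3, 4))]))[0] for _ in range(5)]
print(np.std(outs, axis=0))      # clearly non-zero spread across batches
```

With a single example, only beta would survive the normalization, so the layer's output carries no information about its input.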

Although batch normalization has demonstrated significant training speed-ups and generalization benefits in feed-forward networks, it has proven difficult to apply in recurrent architectures (Laurent et al., 2016).


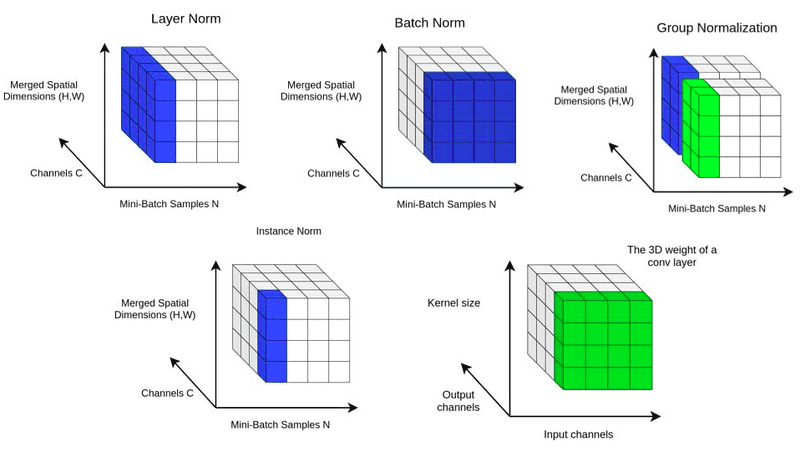
